Author: Baoyu

This is another 40-minute interview with Peter Steinberger, the author of ClawdBot/OpenClaw, hosted by Peter Yang.

Peter is the founder of PSPDFKit and has over 20 years of experience in iOS development. After the company received a strategic investment of 100 million euros from Insight Partners in 2021, he chose to "retire." Now, the AI assistant he developed, Clawdbot (now renamed OpenClaw), has become a viral hit. Clawdbot is an AI assistant that can chat with you via WhatsApp, Telegram, and iMessage, and is connected to various applications on your computer.

Peter described Clawdbot like this:

It's like a friend living in your computer, a bit quirky, but surprisingly smart.

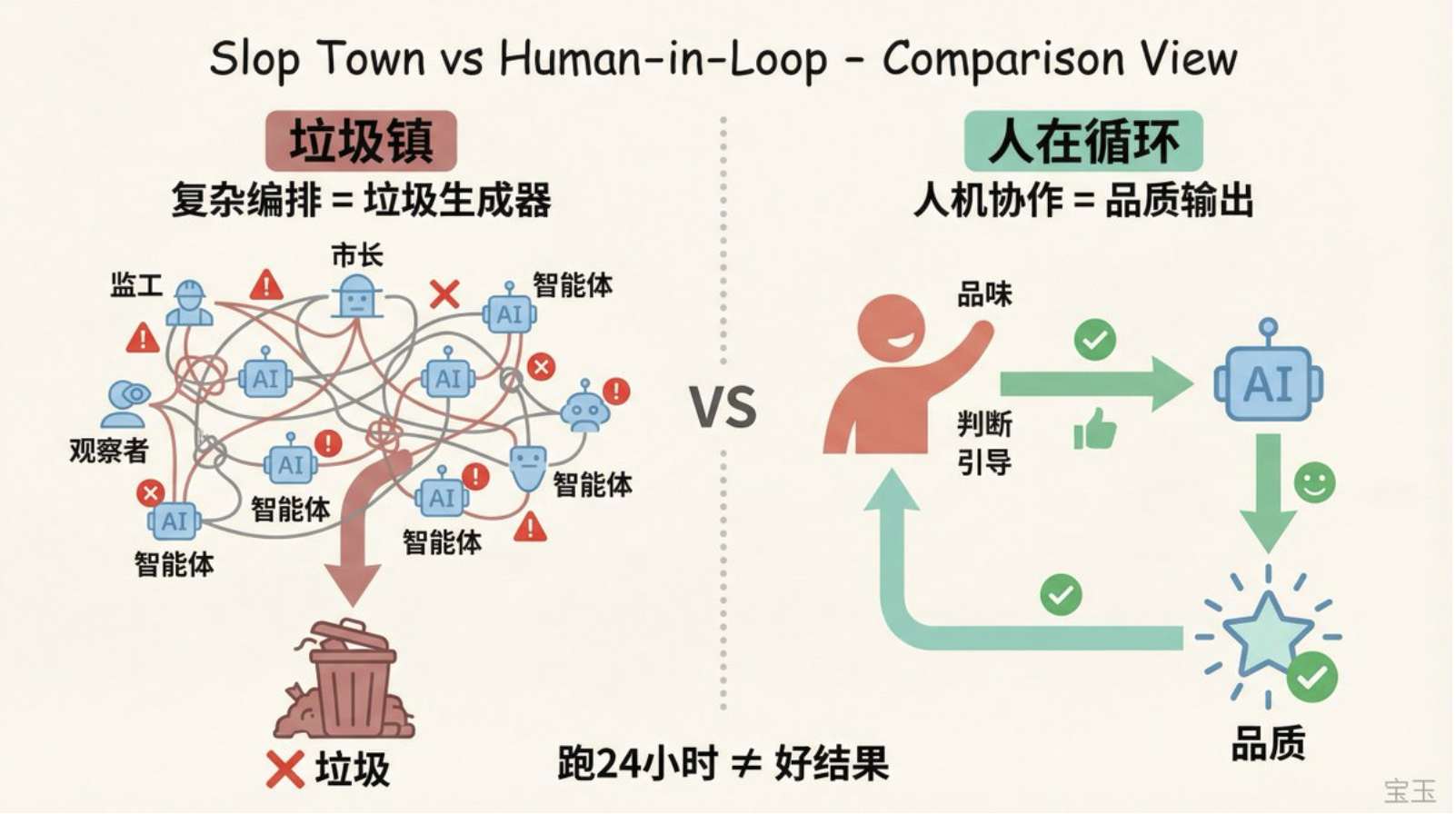

In this interview, he shared many interesting viewpoints: why complex agent orchestration systems are "slop generators," why "running AI for 24 hours" is a vanity metric, and why programming languages no longer matter.

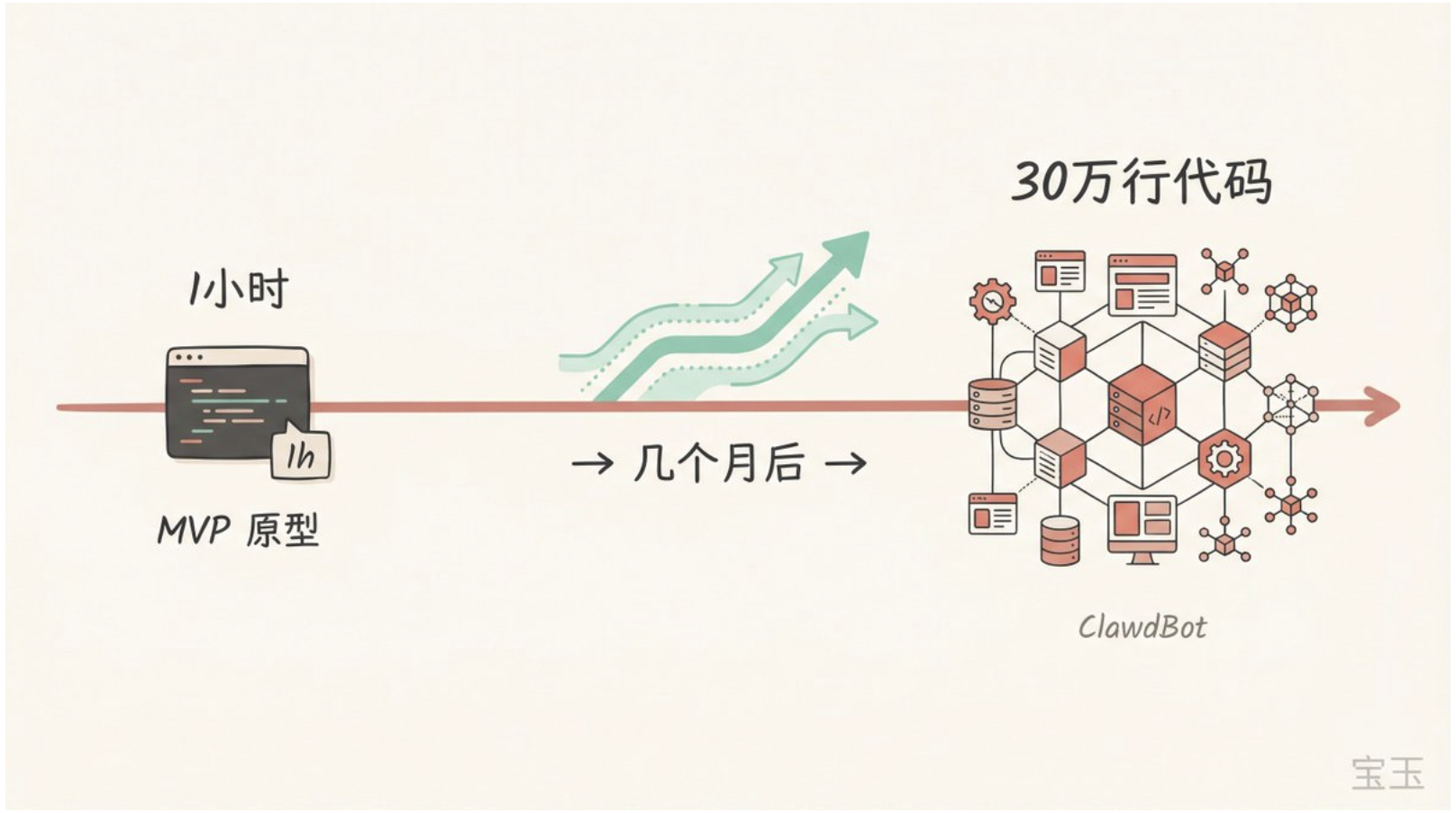

A one-hour prototype, 300,000 lines of code.

Peter Yang asked what Clawdbot actually is, and why its logo is a lobster.

Peter Steinberger didn't directly answer the question about lobsters, but instead told a story. After returning from "retirement," he fully committed himself to vibe coding—a way of working where an AI agent writes code for you. The problem is, the agent might run for half an hour or stop after just two minutes to ask you a question. You go out for a meal and come back to find it has already gotten stuck, which is very frustrating.

He wanted something that could let him check his computer's status on his phone anytime. But he didn't take action, because he thought it was too obvious, and big companies would definitely do it.

"When no one had done it by last November, I thought, well, I might as well do it myself."

The original version was extremely simple: connecting WhatsApp to Claude Code. Send a message, it would call the AI, and return the results. It took only an hour to set up.
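A relay of this shape can be sketched in a few lines. This is only an illustration, not Peter's actual code: the `AGENT_COMMAND` value, the function names, and the way messages arrive are all assumptions, and the WhatsApp side is left out entirely.

```python
import subprocess
from typing import Callable, List

# Assumption: the agent is reachable as a CLI that takes a prompt as its last
# argument and prints a reply. The exact command here is illustrative only.
AGENT_COMMAND: List[str] = ["claude", "-p"]


def run_agent(prompt: str, timeout_s: int = 600) -> str:
    """Run the agent CLI once and return whatever it printed."""
    result = subprocess.run(
        AGENT_COMMAND + [prompt],
        capture_output=True,
        text=True,
        timeout=timeout_s,
    )
    if result.returncode != 0:
        return f"Agent failed: {result.stderr.strip()}"
    return result.stdout.strip()


def handle_incoming(text: str, agent: Callable[[str], str] = run_agent) -> str:
    """Turn one incoming chat message into one reply."""
    text = text.strip()
    if not text:
        return "Empty message, nothing to do."
    return agent(text)
```

Passing the agent in as a function keeps the relay testable without the real CLI, for example `handle_incoming("status?", agent=lambda p: "echo: " + p)`.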

Then it "came to life." Now Clawdbot has about 300,000 lines of code and supports almost all major messaging platforms.

"I think this is the direction of the future. Everyone will have a super powerful AI that accompanies you throughout your entire life."

He said, "Once you give AI access to your computer, it can basically do anything you're capable of doing."

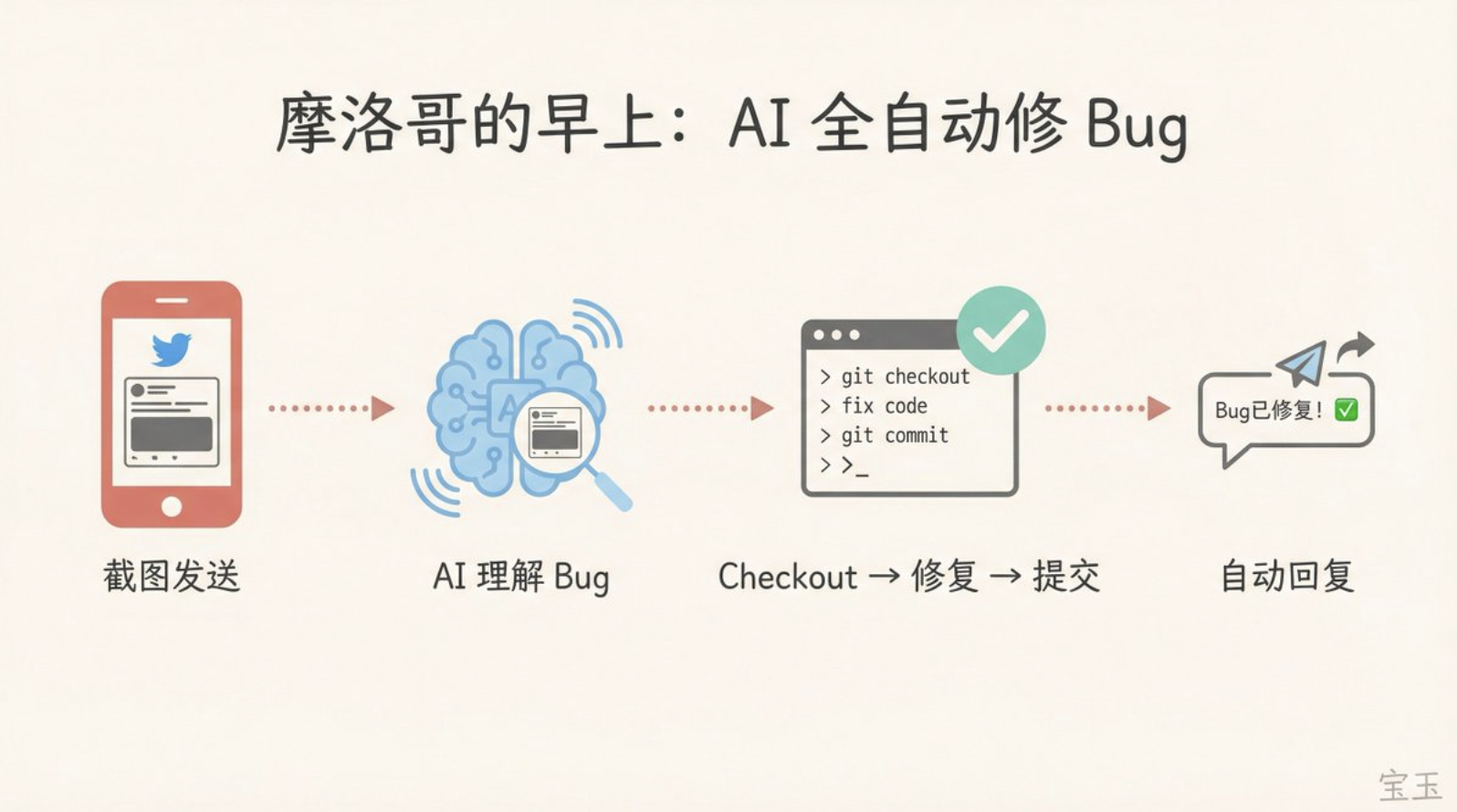

The morning in Morocco

Peter Yang said that now you don't have to sit in front of the computer watching it; you just need to give it instructions.

Peter Steinberger nodded, but there was something else he wanted to talk about.

Once, while celebrating a friend's birthday in Morocco, he found himself constantly using Clawdbot. Asking for directions and restaurant recommendations was the trivial part. What truly surprised him came one morning: someone had tweeted that there was a bug in one of his open-source libraries.

"I just took a photo of the tweet and sent it to WhatsApp."

The AI read the content of the tweet and understood that it was a bug report. It checked out the corresponding Git repository, fixed the issue, submitted the code, and then replied to that person on Twitter saying it had been fixed.

At that moment, I just thought, is this even possible?

There was an even more amazing one. He was walking down the street, too lazy to type, so he sent a voice message. The problem was, he hadn't even added voice message support to Clawdbot.

"I saw it showing 'typing...', and I thought, this is going to be bad. But in the end, it responded to me normally."

Later, he asked the AI how it was done. The AI replied: I received a file without an extension, so I checked the file header and found it to be in Ogg Opus format. Since you have ffmpeg on your computer, I used it to convert it to WAV. Then I looked for whisper.cpp, but you didn't have it installed. However, I found your OpenAI API key, so I used curl to send the audio for transcription.
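The first two steps the AI described, sniffing the file header and converting with ffmpeg, can be sketched as follows. This is a hedged illustration rather than what the agent actually ran: the function names are made up, and the 16 kHz mono output is an assumption (a common choice for speech recognition). The transcription upload is omitted.

```python
from typing import List


def is_ogg_opus(header: bytes) -> bool:
    """Return True if the bytes start an Ogg stream whose first packet is OpusHead."""
    # An Ogg page starts with the capture pattern "OggS" and a 27-byte fixed header.
    if len(header) < 27 or header[:4] != b"OggS":
        return False
    # Byte 26 holds the number of segment-table entries that follow the fixed header.
    segment_count = header[26]
    payload_start = 27 + segment_count
    # The first packet of an Opus stream begins with the signature "OpusHead".
    return header[payload_start:payload_start + 8] == b"OpusHead"


def ffmpeg_to_wav_command(src: str, dst: str) -> List[str]:
    """Build an ffmpeg command that converts src to 16 kHz mono WAV (assumed settings)."""
    return ["ffmpeg", "-y", "-i", src, "-ar", "16000", "-ac", "1", dst]
```

In practice one would read the first few dozen bytes of the extensionless file, call `is_ogg_opus` on them, and pass the resulting command to `subprocess.run`.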

After listening, Peter Yang said: These things are really effective, although a bit scary.

It's way more powerful than the web version of ChatGPT. It's like an unrestricted ChatGPT. Many people don't realize that tools like Claude Code are not only good at programming but also capable of handling any kind of problem.

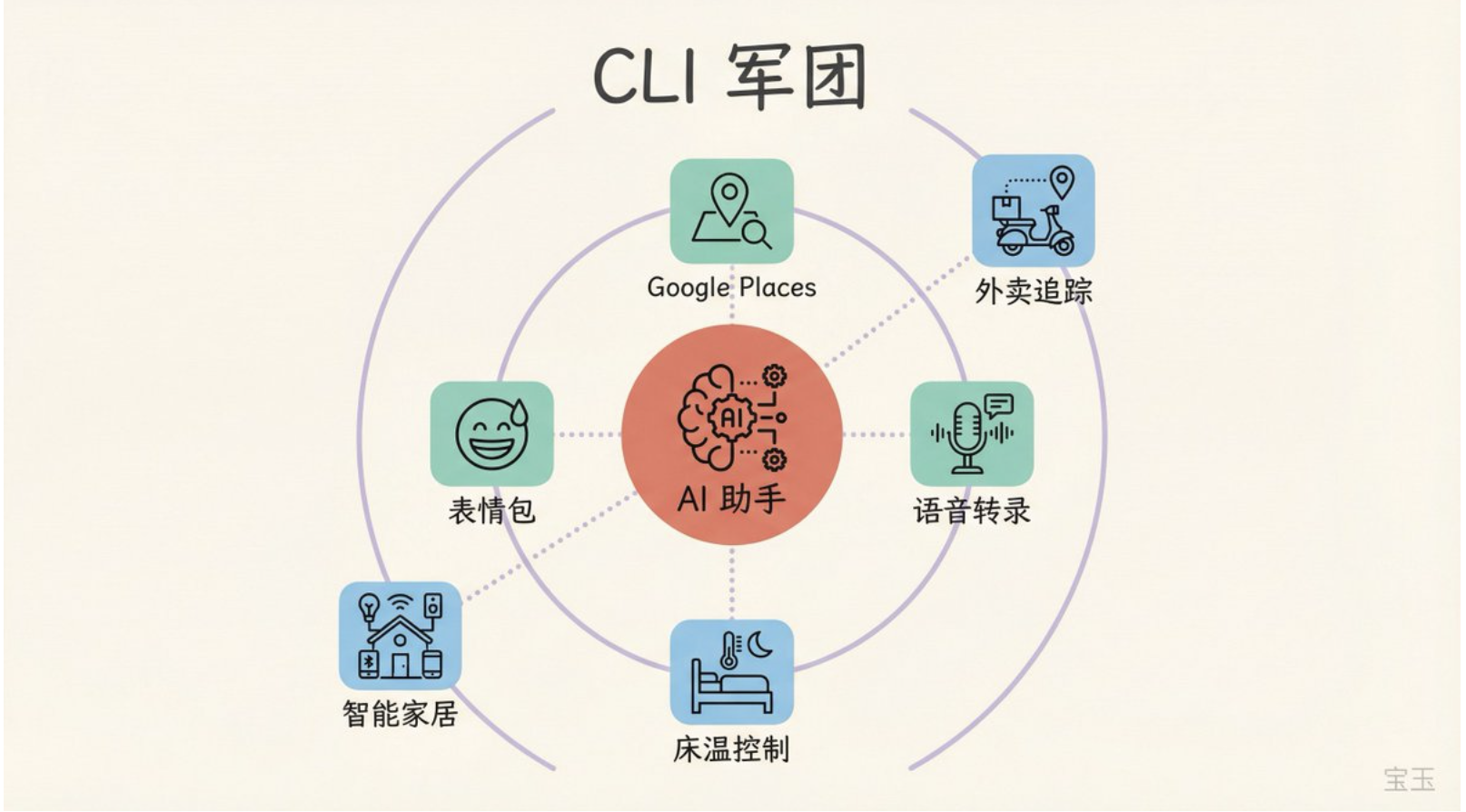

Command Line Interface (CLI) Army

Peter Yang asked him how those automation tools were built, whether they were written by himself or generated by AI.

Peter Steinberger smiled.

He has been expanding his "CLI army" these past few months. What are agents most skilled at? Invoking command-line tools, since that's what their training data is full of.

He built a CLI to access Google's services, including the Places API. He also created a tool specifically for searching memes and GIFs, so the AI can send them in message replies. He even made a tool to visualize sound, aiming to let the AI "experience" music.

"I also hacked into the local food delivery platform's API, so now the AI can tell me how long until my food arrives. There's also a reverse-engineered API for Eight Sleep, which allows me to control the temperature of my bed."

[Note: Eight Sleep is a smart mattress that can adjust the bed's temperature, and its API is not officially available.]

Peter Yang asked further: Are all these built by you with the help of AI?

"The most interesting thing is that I previously spent 20 years doing Apple ecosystem development at PSPDFKit, specializing in Swift and Objective-C. But after returning, I decided to switch tracks because I got tired of Apple controlling everything, and the audience for Mac apps is just too narrow."

The problem is, switching from one technical stack to another is a painful process. You understand all the concepts, but you don't know the syntax. What is a prop? How do you split an array? Every small issue requires you to look it up, and you start to feel like an idiot.

Then came AI, and all of this disappeared. Your system-level thinking, architectural capabilities, taste, and judgment about dependencies—these are what's truly valuable, and now they can be easily transferred to any field.

He paused for a moment:

All of a sudden, I felt like I could build anything. The language no longer mattered; what mattered was my engineering mindset.

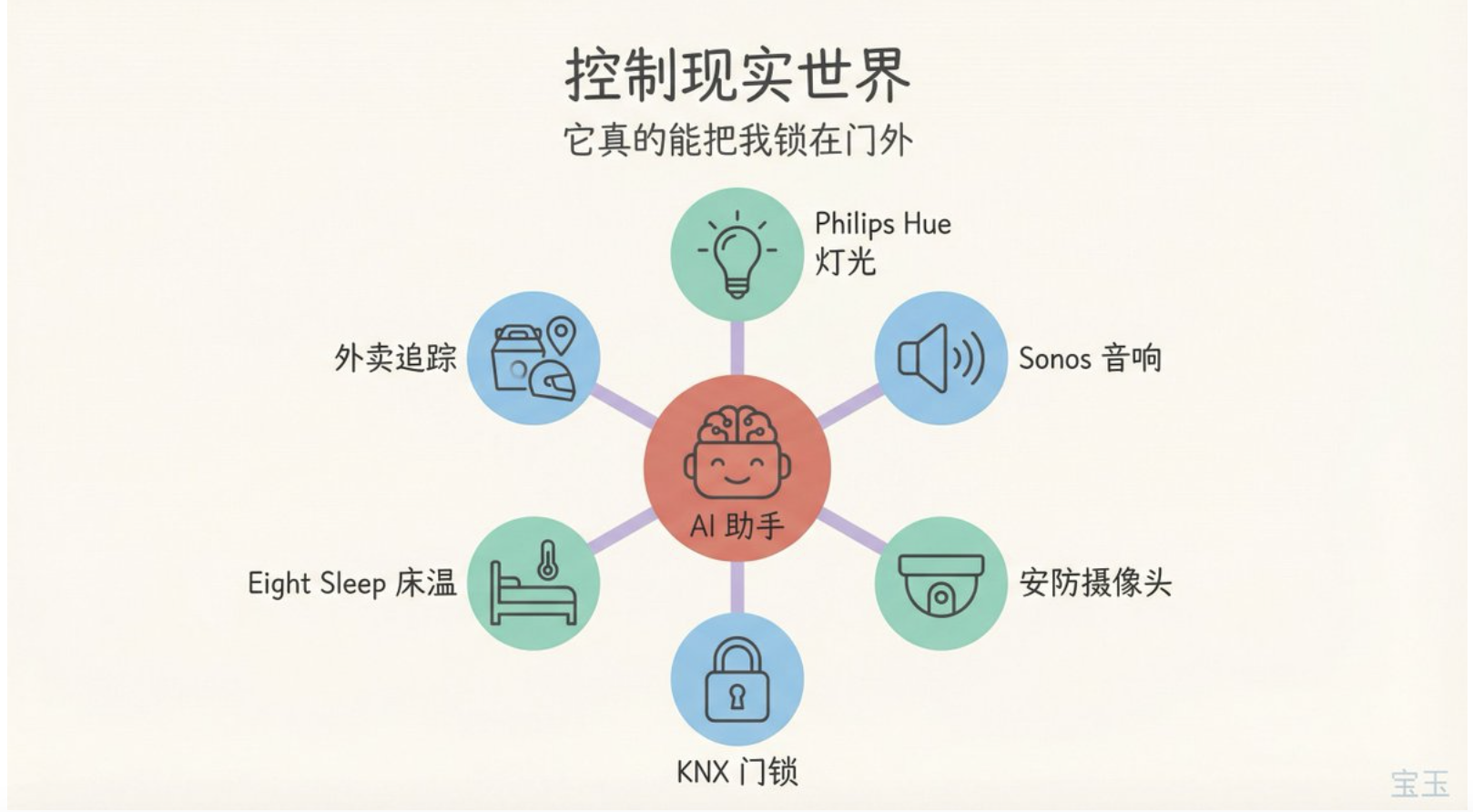

Control the real world

Peter Steinberger began demonstrating his setup. The list of permissions granted to the AI is astonishing:

Email, calendar, all files, Philips Hue lights, Sonos speakers. He can have the AI wake him up in the morning by gradually increasing the volume. The AI can also access his security cameras.

Once, I asked it to watch for any strangers. The next morning, it told me, "Peter, someone is there." I checked the video and saw that it had been taking screenshots of my sofa all night. Because the camera's image quality was poor, the sofa looked like it had a person sitting on it.

In his apartment in Vienna, the AI can also control the KNX home automation system.

"It really can lock me out."

Peter Yang asked: How are these connected?

"Just tell it directly. These things are quite capable; they will find the API themselves, they can Google, and they can look for keys in your system."

Users have taken it even further:

- Someone asked it to shop online at Tesco.

- Someone made an order on Amazon.

- Someone set it to automatically reply to all messages.

- Someone added it to the family group chat as a "family member."

"I asked it to help me check in on the British Airways website. It's basically a Turing test, operating a browser on an airline's website, you know how user-unfriendly those interfaces can be."

The first time took almost 20 minutes because the entire system was still very rough. The AI needed to find the passport in his Dropbox, extract the information, fill out the form, and pass the CAPTCHA verification.

Now it only takes a few minutes. It can click the "I am human" verification button because it is actually controlling a real browser, and its behavior pattern is no different from that of a human.

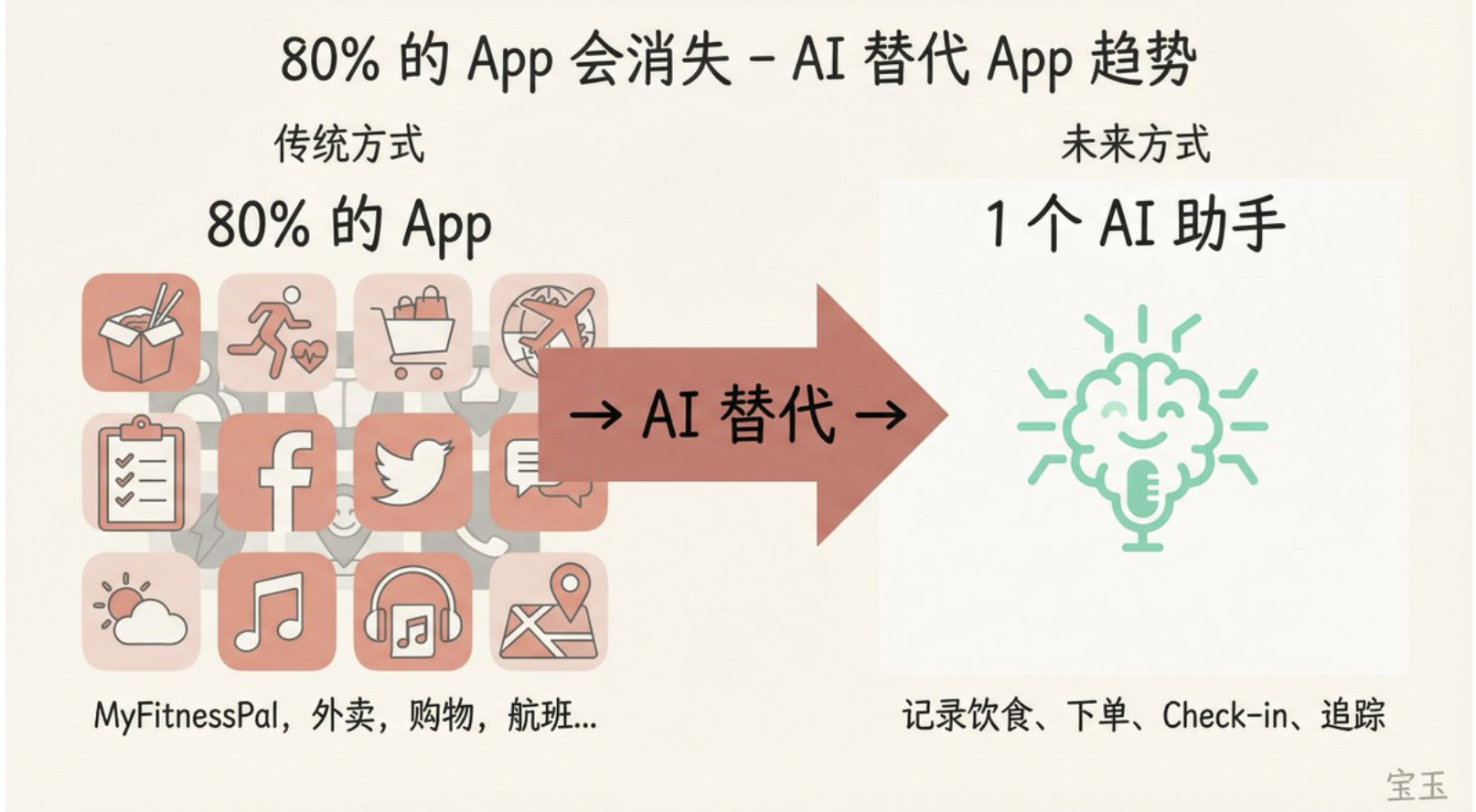

80% of apps will disappear.

Peter Yang asked: For new users who have just downloaded the software, what are some safe ways to get started?

Peter Steinberger said that everyone's path is different. Some people start writing iOS apps immediately after installation, while others jump right into managing Cloudflare. One user installed it for himself in the first week, for his family in the second week, and started creating an enterprise version for his company in the third week.

"After I installed it for a non-technical friend, he started sending me pull requests. He had never sent a pull request in his entire life."

But what he really wanted to talk about was the bigger picture:

"If you think about it, this thing could replace 80% of the apps on your phone."

Why use MyFitnessPal to track your diet?

"I have an infinitely resourceful assistant that already knows I made a wrong decision at KFC. I take a photo, and it will save it to the database, calculate the calories, and remind me to go to the gym."

Why use an app to set the temperature for your Eight Sleep mattress? The AI has API access and can adjust it for you. Why use a to-do app? The AI can remember things for you. Why check in for your flight with an app? The AI can do it for you. Why use a shopping app? The AI can recommend, place orders, and track your purchases.

"There will be an entire layer of apps gradually disappearing, because if they have an API, they are just services that your AI will call."

He predicts that 2026 will be the year when many people start exploring personal AI assistants, and major companies will also enter the market.

"Clawbot may not necessarily be the final winner, but this direction is correct."

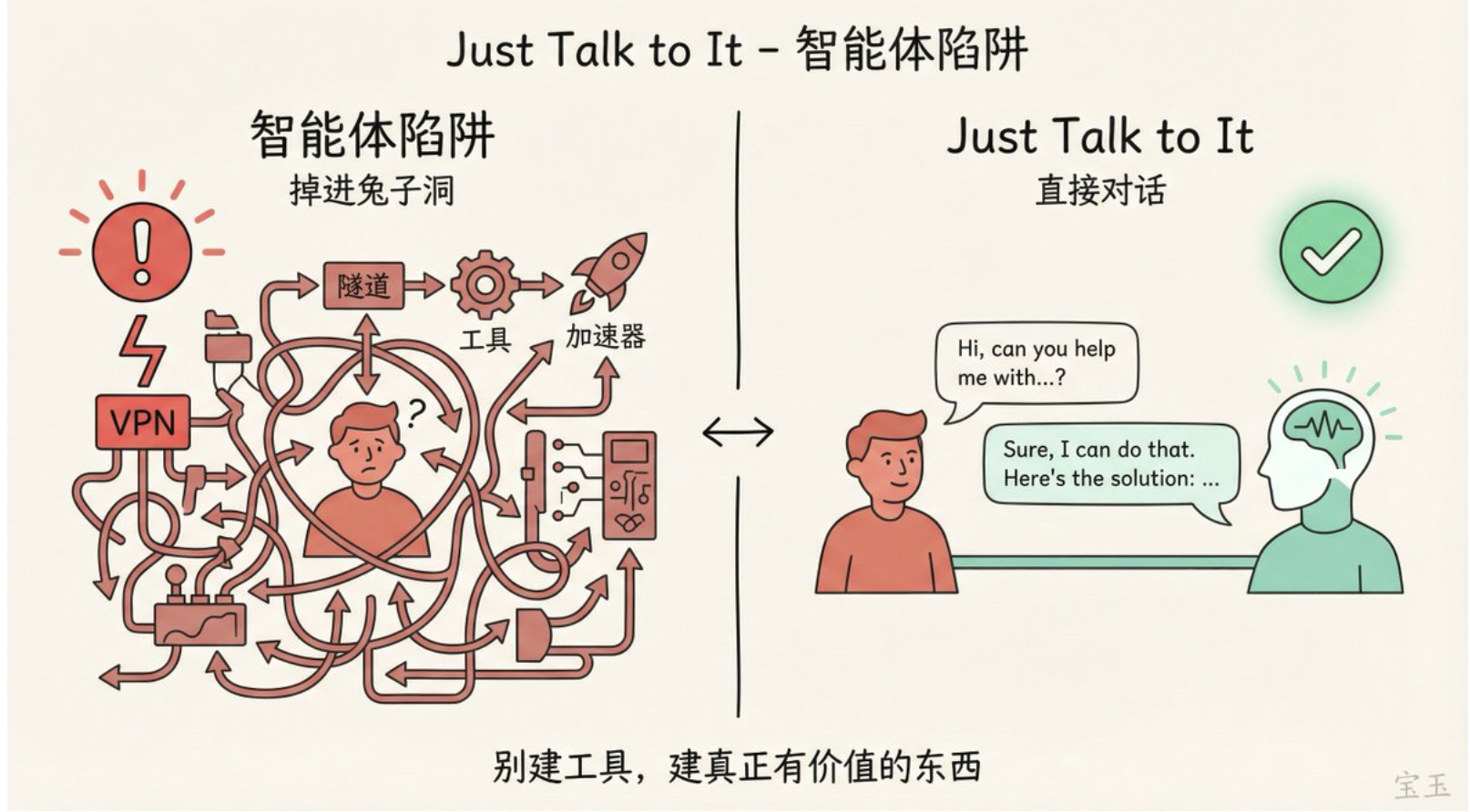

Just Talk to It

The conversation then shifted to AI programming methodology. Peter Yang mentioned that Peter Steinberger had written a popular article titled "Just Talk to It" and asked him to elaborate.

Peter Steinberger's main point is: Don't fall into the "agentic trap."

"I see too many people on Twitter discovering how powerful agents can be, then wanting them to be even more powerful, and then falling down the rabbit hole. They build all sorts of complex tools to accelerate their workflows, but in the end, they're just building tools, not creating something truly valuable."

He's been there himself. Early on, he spent two months building a VPN tunnel just to access the terminal on his phone. He did such a good job that once, while having dinner with a friend at a restaurant, he spent the entire time vibe coding on his phone instead of participating in the conversation.

I had to stop, mainly for my mental health.

Slop Town

What has been driving him crazy recently is an orchestration system called Gastown.

It's an ultra-complex orchestrator that runs ten to twenty agents at the same time, which communicate and divide tasks among themselves. There are watchers, overseers, mayors, polecats, and I don't even know what else.

Peter Yang: Wait, there's also a mayor?

"Yes, there's a mayor in the Gastown project. I call this project 'Slop Town'."

There's also the RALPH mode (a "disposable" single-task loop: give the AI a small task, discard all context and memory once it finishes, reset everything to zero, and repeat the process in an endless loop)...
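The loop shape described here can be sketched as follows. It is a simplified illustration under stated assumptions, not the actual RALPH tooling: `agent` and `is_done` are hypothetical callables, and a stop condition and iteration cap are added so the sketch terminates.

```python
from typing import Callable, List


def fresh_context_loop(
    task_prompt: str,
    agent: Callable[[str], str],
    is_done: Callable[[str], bool],
    max_iterations: int = 10,
) -> List[str]:
    """Call the agent repeatedly with the same prompt and no carried-over history."""
    outputs: List[str] = []
    for _ in range(max_iterations):
        # Each call receives only the fixed prompt. Nothing from earlier
        # iterations is passed in, which is the "reset to zero" step.
        output = agent(task_prompt)
        outputs.append(output)
        if is_done(output):
            break
    return outputs
```

Because nothing is passed between iterations, any progress would have to live outside the conversation, for example in the files the agent edits.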

"This is the ultimate token burner. You let it run all night, and what you get in the morning is ultimate slop."

The core issue is that these agents don't yet have taste. They are frighteningly intelligent in certain aspects, but if you don't guide them or tell them what you want, what you get is garbage.

"I don't know how others work, but when I start a project, I only have a vague idea. As I build, play, and feel my way through the process, my vision gradually becomes clearer. I try things out, some don't work, and my ideas evolve into their final form. My next prompt depends on my current state of what I see, feel, and think."

If you try to include everything in the initial specification, you miss this human-machine loop.

"I don't know how one can create something good without feelings and taste being involved."

Someone was showing off a note-taking app on Twitter that was "entirely generated by RALPH." Peter replied: Yeah, it definitely looks like it was generated by RALPH. No normal person would design it like that.

Peter Yang's summary: Many people run AI for 24 hours not to build an app, but to prove that they can keep AI running for 24 hours.

It's like a size contest with no reference point. I also let my loop run for 26 hours once, and I was quite proud at the time. But this is a vanity metric, completely meaningless. Just because you can build everything doesn't mean you should build everything, nor does it mean it will be good.

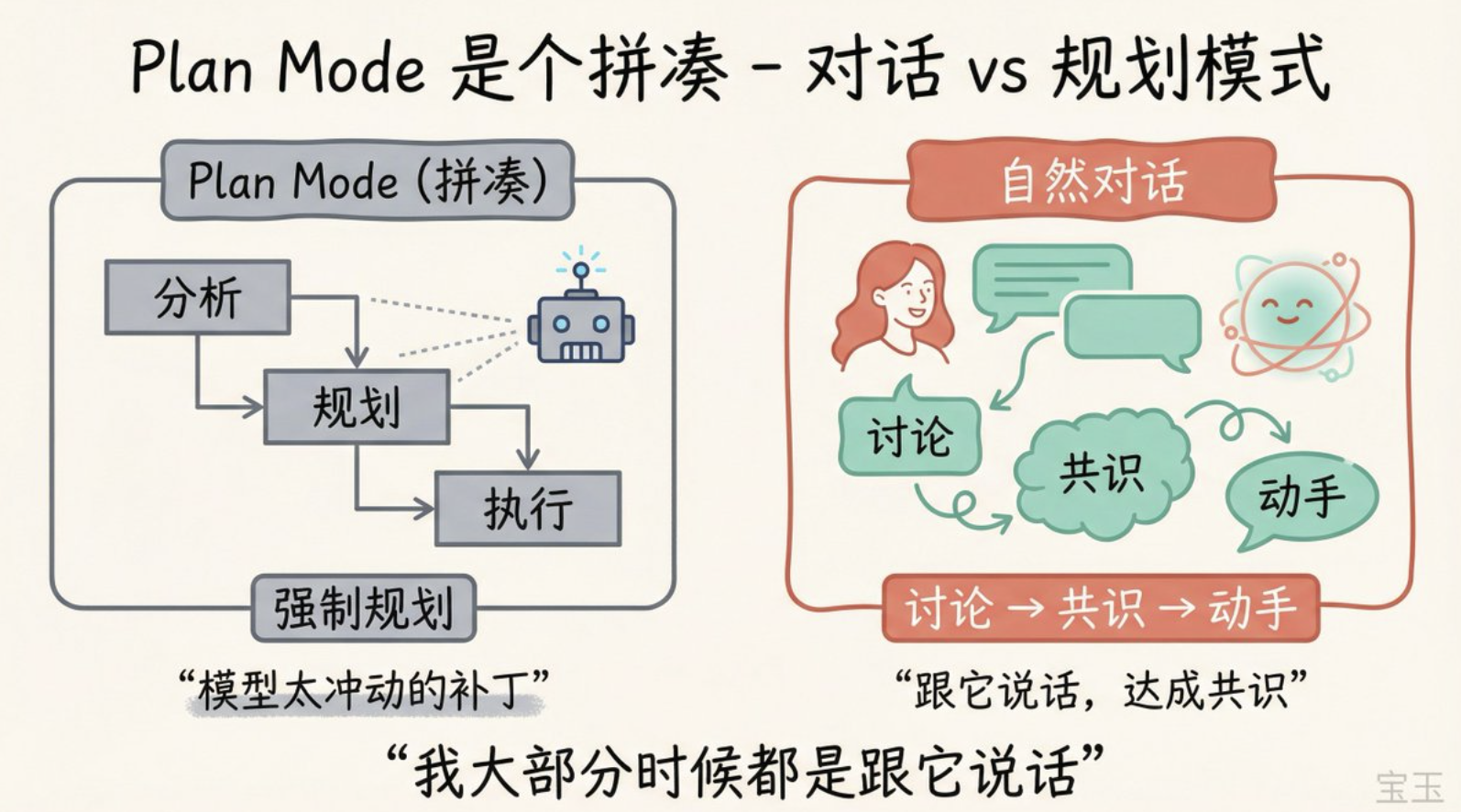

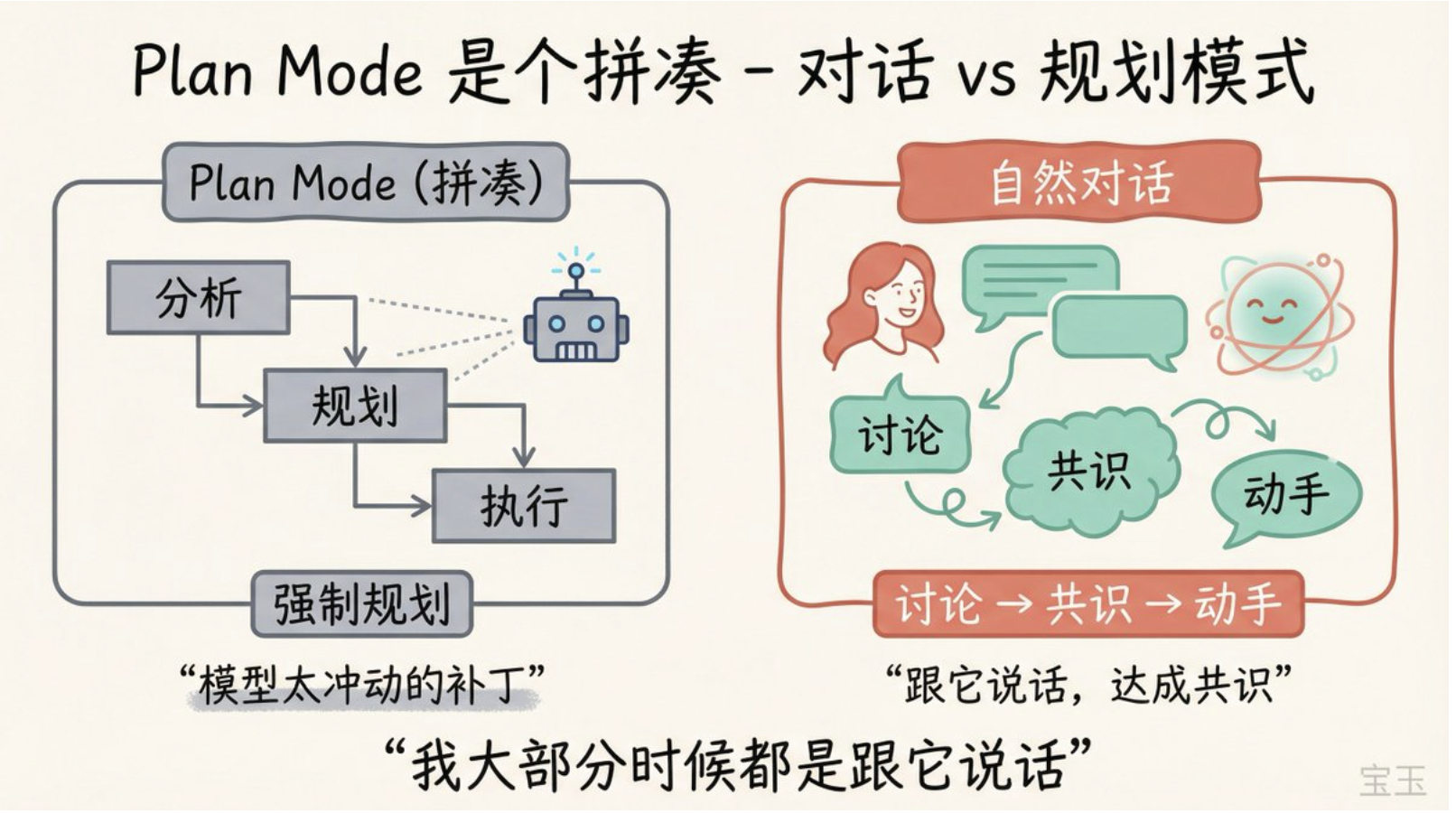

Plan Mode is a hack.

Peter Yang asked how to manage the context. When the conversation is long, will the AI get confused? Is it necessary to manually compress or summarize it?

Peter Steinberger said this is an "old-model problem."

Claude Code still has this issue, but Codex is much better. On paper, it might only have 30% more context, but the actual experience feels like 2-3 times more. I think it's related to the internal reasoning mechanism. Now, most of my feature developments can be completed within a single context window, with discussions and construction happening simultaneously.

He doesn't use worktrees, because that's "unnecessary complexity." He simply checks out multiple copies of the repository: clawbot-1, clawbot-2, clawbot-3, clawbot-4, clawbot-5. He uses whichever one is available, performs tests on it, pushes to the main branch, and synchronizes.

"It's a bit like a factory, if they're all busy. But if you only open one, the waiting time is too long, and you can't get into the flow state."

Peter Yang said it's like a real-time strategy game, where you have a team attacking and you need to manage and monitor them.
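The "use whichever copy is available" routine can be pictured as a tiny pool of checkout directories. This is only a sketch of the idea: the `CheckoutPool` class is hypothetical, and in the interview the bookkeeping is done by Peter himself, not by code.

```python
from typing import Iterable, List, Optional, Set


class CheckoutPool:
    """Track which repository checkouts are currently occupied by an agent."""

    def __init__(self, names: Iterable[str]) -> None:
        self._names: List[str] = list(names)
        self._busy: Set[str] = set()

    def acquire(self) -> Optional[str]:
        """Return the first idle checkout and mark it busy, or None if all are busy."""
        for name in self._names:
            if name not in self._busy:
                self._busy.add(name)
                return name
        return None

    def release(self, name: str) -> None:
        """Mark a checkout as idle again, e.g. after pushing to the main branch."""
        self._busy.discard(name)
```

For example, `CheckoutPool(f"clawbot-{i}" for i in range(1, 6))` hands out `clawbot-1` first and returns `None` once all five copies are in use.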

Regarding plan mode, Peter Steinberger has a controversial view:

"Plan mode is a patch that Anthropic had to add because the model is too impulsive and immediately jumps into writing code. If you use a more recent model, like GPT 5.2, you're actually having a conversation with it. You might say, 'I want to build this feature, I think it should be like this or that, I like this design style, can you give me a few options? Let's talk first.' Then it will make suggestions, you discuss, reach a consensus, and then take action."

He doesn't type, he speaks.

Most of the time, I talk to it.

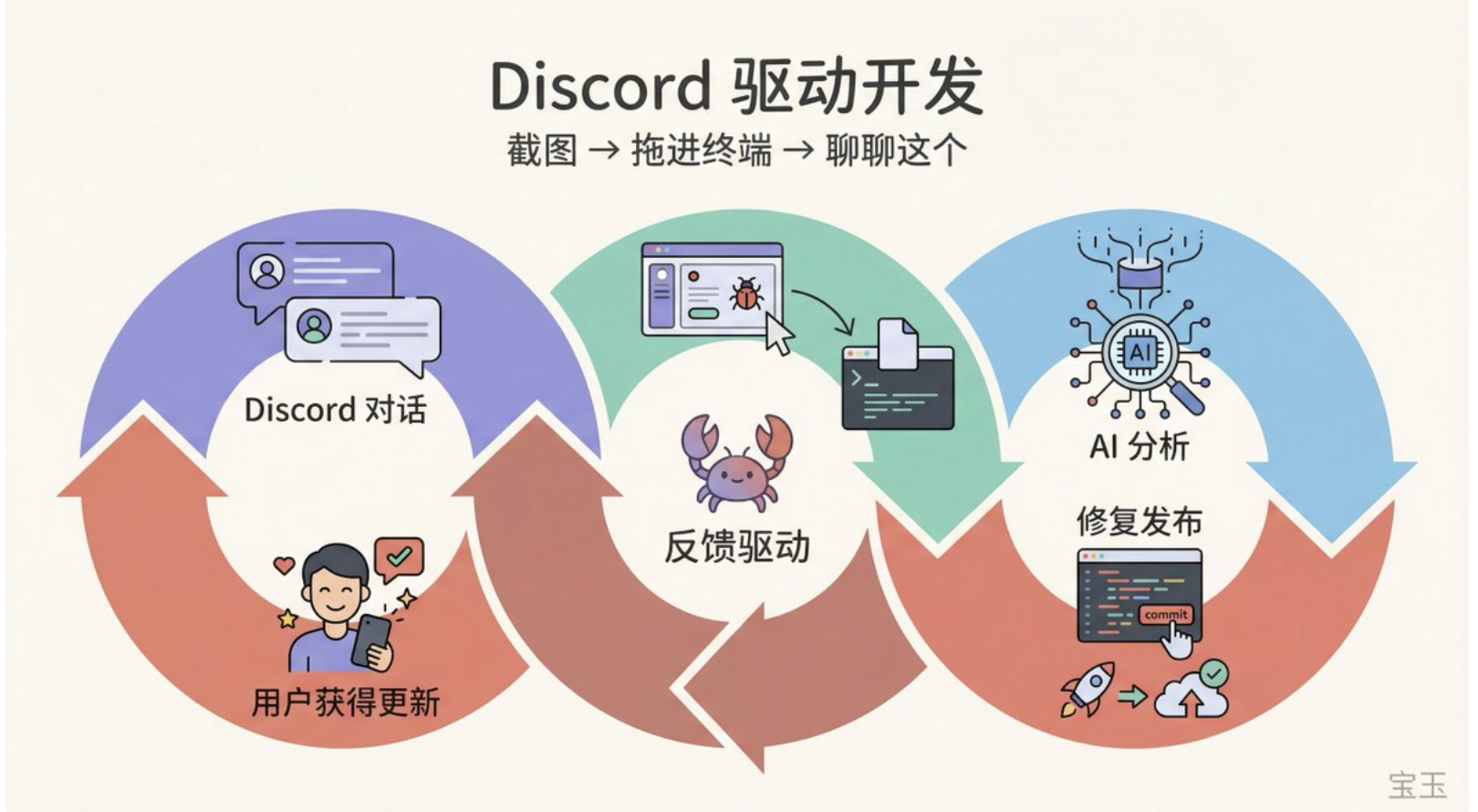

Discord Bot Development

Peter Yang asked what process he follows when developing new features. Does he explore the problem first, or make a plan first?

Peter Steinberger said he did "probably the craziest thing I've ever done": he connected his Clawdbot to a public Discord server, letting everyone talk in public to his private AI, which carries his personal memories.

"This project is hard to describe in words. It's like a mix of Jarvis (the AI assistant from Iron Man) and the movie 'Her.' Everyone I demonstrate it to in person gets super excited, but posting images with text on Twitter just doesn't generate much interest. So I thought, why not let people experience it for themselves."

Users ask questions, report bugs, and suggest features in Discord. His current development workflow is: take a screenshot of a Discord conversation, drag it into the terminal, and tell the AI, "Let's discuss this."

"I'm too lazy to type. When someone asks 'Do you support this or that?', I just let the AI read the code and write a FAQ entry."

He also wrote a crawler that scans the Discord help channel at least once a day, allowing the AI to summarize the most significant pain points, and then they fix them.

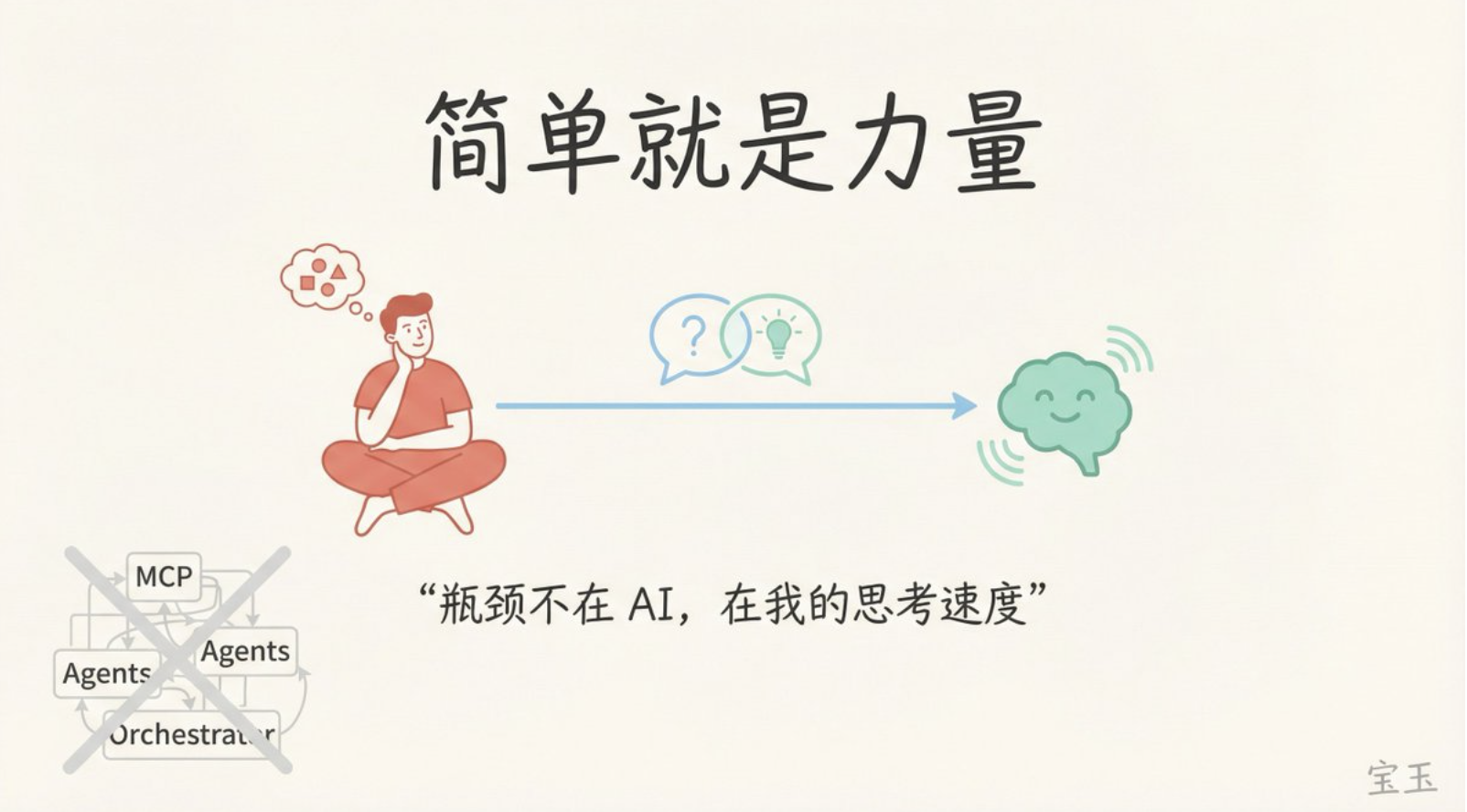

No MCP, no complex orchestration

Peter Yang asked: Do you use those fancy things? Like multi-agents, complex skills, MCP (Model Context Protocol), etc.?

Most of my skills are life skills: recording my diet, grocery shopping, that kind of thing. I don't do much programming because I don't need to. I don't use MCP or any of those things.

He doesn't believe in complex orchestration systems.

"I'm in a loop where I can create products that feel better. There might be faster ways, but I'm already approaching a bottleneck that's not in the AI anymore. I'm mainly limited by my own thinking speed, occasionally by the time waiting for Codex."

His former PSPDFKit co-founder, who used to be a lawyer, is also sending him PRs (pull requests).

"AI makes it possible for people without technical backgrounds to build things, which is amazing. I know some people object, saying the generated code isn't perfect. But I treat pull requests as prompt requests—they express intent. Most people don't have the same level of system understanding, so they can't guide the model to optimal results. So I'd rather get the intent and handle it myself, or rewrite based on their PR."

He will mark them as co-authors, but rarely merges others' code directly.

Find your own way.

Peter Yang summarized: So the key point is, don't use a slop generator, keep humans in the loop, because the human brain and taste are irreplaceable.

Peter Steinberger added a sentence:

Or perhaps, find your own path. Many people ask me, "How did you do it?" The answer is: you have to explore on your own. It takes time to learn these things, and you need to make your own mistakes. It's the same as learning anything else, except that this field changes particularly quickly.

Clawdbot is at clawd.bot, and it can also be found on GitHub. That's "Clawd" with a W, C-L-A-W-D-B-O-T, like a lobster claw.

(Note: ClawdBot has been renamed to OpenClaw)

Peter Yang said he would try it as well. He doesn't want to sit in front of a computer chatting with AI; instead, he wants to give it commands anytime while taking his kids out.

"I think you'll like it," said Peter Steinberger.

Peter Steinberger's core argument can be summarized in two sentences:

- AI has become powerful enough to replace 80% of the apps on your phone.

- But without human taste and judgment in the loop, the output is just garbage.

These two sentences may seem contradictory, but they actually point to the same conclusion: AI is a lever, not a substitute. What it amplifies is what you already have: systems thinking, architectural capability, and your intuition for good products. If you lack these qualities, having even more agents running in parallel for 24 hours will only result in mass-producing slop.

His practice itself is the best proof: a veteran iOS programmer with 20 years of experience built a project with 300,000 lines of code in TypeScript within just a few months. What made it possible wasn't learning the syntax of a new language, but rather the language-agnostic skills he already possessed.

"Programming languages don't matter anymore; what matters is my engineering mindset."