This week, the artificial intelligence (AI) giant Anthropic rolled out Claude Code Security, an AI-driven code-scanning tool that hunts vulnerabilities and drafts patches, jolting cybersecurity markets while raising pointed questions about jobs and industry power shifts.

Can Claude Code Security Replace Human Scanners?

Anthropic’s latest addition to its Claude Code platform arrives with a simple pitch: let AI read your entire codebase like a seasoned security researcher, then flag what others miss. According to the company’s release, Claude Code Security scans for vulnerabilities, suggests patches, and presents findings with severity and confidence ratings, while keeping humans firmly in the approval seat.

Unlike traditional static application security testing (SAST) tools that rely on predefined patterns, Claude Code Security leans on advanced large language models (LLMs), including Claude Opus 4.6, to reason through how data flows and how components interact. The goal is to catch business logic flaws and broken access controls that slip past rule-based scanners.
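To illustrate the gap described above, here is a toy sketch. It is not how Claude Code Security works internally. It shows a handler with a broken access control flaw (an insecure direct object reference) next to a minimal regex rule set of the kind a simple pattern scanner might use. The names `INVOICES`, `get_invoice`, `get_invoice_fixed`, and `pattern_scan` are hypothetical. Real static analyzers do far more than match three regexes, and some track data flow.

```python
import re

# Hypothetical in-memory store: invoice id -> record with an owner.
INVOICES = {
    1: {"owner": "alice", "amount": 120},
    2: {"owner": "bob", "amount": 75},
}

# Source text of the vulnerable handler, as a pattern scanner would see it.
VULNERABLE_SOURCE = '''
def get_invoice(current_user, invoice_id):
    return INVOICES[invoice_id]
'''

def get_invoice(current_user, invoice_id):
    # Same logic as VULNERABLE_SOURCE: any user can read any invoice,
    # because nothing checks ownership (broken access control / IDOR).
    return INVOICES[invoice_id]

def get_invoice_fixed(current_user, invoice_id):
    # Patched version: enforce ownership before returning data.
    invoice = INVOICES.get(invoice_id)
    if invoice is None or invoice["owner"] != current_user:
        raise PermissionError("not authorized to view this invoice")
    return invoice

# Toy rule set in the style of a simple pattern-matching scanner.
DANGEROUS_PATTERNS = [
    re.compile(r"\beval\("),
    re.compile(r"\bexec\("),
    re.compile(r"execute\(.*[\"']\s*\+"),  # SQL built by string concatenation
]

def pattern_scan(source: str) -> list:
    """Return the regexes that match anywhere in the source text."""
    return [p.pattern for p in DANGEROUS_PATTERNS if p.search(source)]

if __name__ == "__main__":
    print(pattern_scan(VULNERABLE_SOURCE))  # [] : no rule fires
    print(get_invoice("bob", 1))             # yet bob can read alice's invoice
```

The pattern scan returns an empty list, yet `get_invoice("bob", 1)` still returns Alice's record. The flaw lies in a check the code never performs, not in a dangerous call that a regex could match. Catching it requires reasoning about who is allowed to see what.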

During internal testing, Anthropic said Opus 4.6 identified more than 500 high-severity vulnerabilities in production open-source codebases, some of which had gone unnoticed for years. Those findings are undergoing triage and responsible disclosure, suggesting the tool’s ambitions extend beyond cosmetic fixes.

The workflow is built around guardrails. After a comprehensive scan, the system performs self-verification, attempting to confirm or disprove its own findings before presenting them in a dashboard with suggested patches. There is no automated “push to prod” here: every fix requires human approval, at least for now.
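The guardrails described above can be sketched as a small human-in-the-loop pipeline. This is a hypothetical illustration, not Anthropic’s API. The `Finding` record, `self_verify`, and `apply_with_approval` are invented names. The stand-in verifier, a simple confidence threshold, is far cruder than the self-verification pass Anthropic describes.

```python
from dataclasses import dataclass
from typing import Callable, List

# Lower rank sorts first on the dashboard.
SEVERITY_RANK = {"critical": 0, "high": 1, "medium": 2, "low": 3}

@dataclass
class Finding:
    # Hypothetical record; field names are illustrative only.
    title: str
    severity: str            # "critical", "high", "medium", or "low"
    confidence: float        # scanner's own estimate, 0.0 to 1.0
    suggested_patch: str
    verified: bool = False
    applied: bool = False

def self_verify(findings: List[Finding],
                verifier: Callable[[Finding], bool]) -> List[Finding]:
    """Second pass: keep only findings the verifier confirms."""
    kept = []
    for f in findings:
        f.verified = verifier(f)
        if f.verified:
            kept.append(f)
    # Present the most severe, then most confident, findings first.
    return sorted(kept, key=lambda f: (SEVERITY_RANK[f.severity], -f.confidence))

def apply_with_approval(finding: Finding,
                        approve: Callable[[Finding], bool]) -> bool:
    """Mark a patch as applied only if it is verified and a human approves it."""
    if finding.verified and approve(finding):
        finding.applied = True
    return finding.applied

if __name__ == "__main__":
    findings = [
        Finding("Possible SQL injection in report query", "medium", 0.4,
                "use a parameterized query"),
        Finding("Missing ownership check in get_invoice", "high", 0.9,
                "compare invoice owner with current user"),
    ]
    # Stand-in verifier: a crude confidence threshold (assumption).
    dashboard = self_verify(findings, lambda f: f.confidence >= 0.5)
    for f in dashboard:
        print(f.severity, f.confidence, f.title)
    # Nothing is applied until a reviewer says yes.
    print(apply_with_approval(dashboard[0], approve=lambda f: False))
```

Passing `approve` as an explicit callback makes the human decision a required input rather than a default. This reflects the “no automated push to prod” stance in the workflow above.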

Anthropic developed the capability over more than a year through its Frontier Red Team and tested it in cybersecurity competitions such as Capture the Flag events, alongside collaborations with institutions like Pacific Northwest National Laboratory. The tool is currently in a limited research preview for Enterprise and Team customers, with expedited access for open-source maintainers.

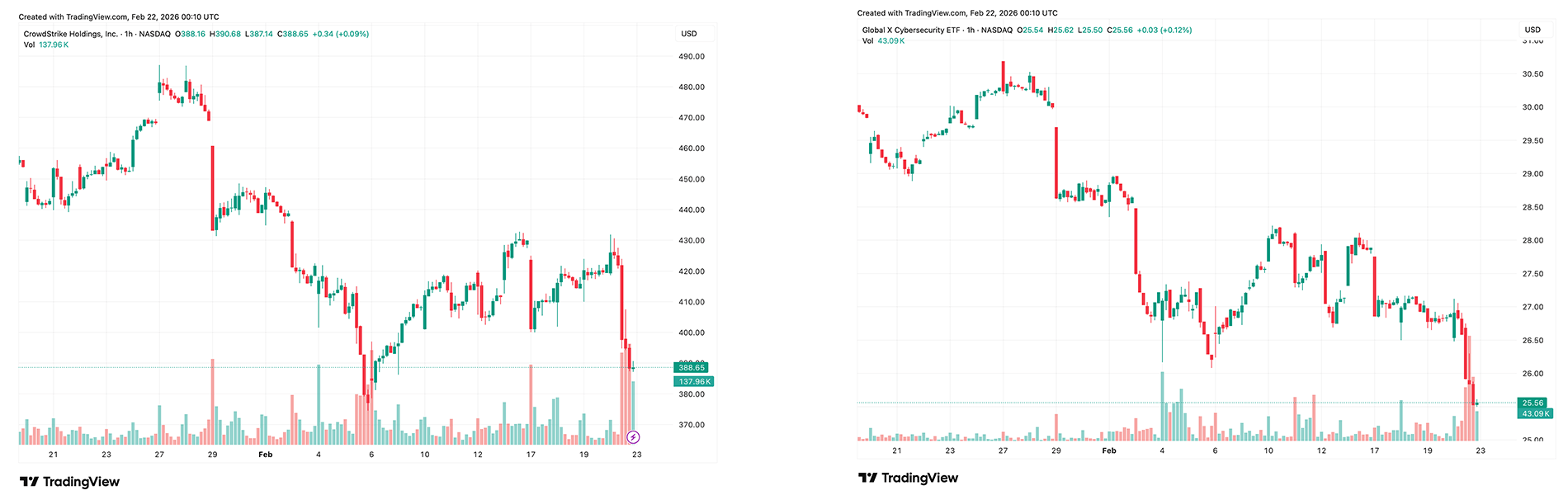

Wall Street, however, did not wait for the fine print. Shares of major cybersecurity firms slid sharply following the announcement, with companies including CrowdStrike and Cloudflare each dropping about 8%, while others such as Zscaler, Okta, and GitLab also took hits. The broader Global X Cybersecurity ETF fell around 5%, reflecting sector-wide unease.

Some analysts characterized the reaction as headline-driven rather than structural, describing it as a “mini-flash crash” fueled by fears that AI could commoditize vulnerability detection. Others argue that the sell-off signals deeper concerns about how AI may reshape software security economics.

Online discussions, particularly on X, have amplified job anxieties. Posts warn that AI-powered scanners could “wipe out” roles in vulnerability assessment and remediation, especially in entry-level bug triage. In an industry already grappling with automation, the timing feels pointed.

Yet many experts offer a cooler take. Anthropic’s own Logan Graham said, “I think if you’re AGI-pilled, you should care a lot about cybersecurity. Cyberphysical infrastructure is how AGI ‘reaches out into the world.’ That’s why we want Claude to secure it.” Graham added that Anthropic was “hiring for cybersecurity.” Many others framed the new capability as a way to help overwhelmed teams work through their backlogs, not to replace them.

Crucially, Claude Code Security cannot perform runtime testing, send API requests, or validate exploitability in live environments, meaning dynamic testing and human oversight remain essential.

The broader backdrop is hard to ignore. As AI accelerates both code generation and cyberattacks, defenders face adversaries that can probe systems at machine speed.

Anthropic frames its tool as a defensive equalizer, raising the baseline for secure development while acknowledging the dual-use nature of AI. In that sense, Claude Code Security may represent less of a pink slip generator and more of a role rewriter. Security professionals may find themselves spending less time combing through repetitive alerts and more time designing architectures, validating exploits, and steering AI-assisted workflows.

Whether the market tremor proves temporary or marks a structural shift will depend on adoption, integration with existing stacks, and how organizations approach AI in critical infrastructure. For now, Claude Code Security has done something rare in cybersecurity: it made code review the center of a financial and labor debate.

FAQ ❓

- What is Claude Code Security?

  It is an AI-powered tool from Anthropic that scans entire codebases for vulnerabilities and suggests human-reviewed patches.

- Does Claude Code Security replace human security teams?

  No, it requires human approval for fixes and cannot perform runtime testing, positioning it as an assistive tool rather than a replacement.

- Why did cybersecurity stocks fall after the launch?

  Investors reacted to fears that AI-driven vulnerability scanning could disrupt traditional security software business models.

- Who can access Claude Code Security right now?

  It is in limited research preview for Enterprise and Team customers, with expedited access for open-source maintainers.